Tactile representation of an artwork

For People with Visual Impairment

An artwork must above all have outlines highlighted to detect the objects represented, their number and their location relative to the other objects that are part of this work. To distinguish the objects in the image (foreground and second plane), the simplification of the image must allow a better understanding for the observer who will thus have knowledge of the borders and categories of objects represented.

Perspective, a visual element, hinders recognition. A drawn object, simply highlighted (2D ½) has little to do with the tactile experience of this object. The projection of an object on a surface always corresponds to visual cues. Its recognition depends on the cultural assumptions of our memory. So you have to learn to interpret this type of image. A tactile representation must be simplified compared to a visual representation by keeping only the essential information allowing its recognition. It must "preserve the overall meaning" of the object represented [1]

Semantic Segmentation

Semantic segmentation groups in simplified categories parts of the image. Two approaches are possible to understand the simplification of a work of art: (1) Contour detection of visible elements of the artwork; (2) Segmentation of regions belonging to an element with respect to other regions.

These two classes of methods aim to simplify the image by eliminating or greatly simplifying the information contained (colors, details, etc.) and in separating the content into rough areas corresponding to the boundaries of known elements, to facilitate the interpretation of the spatial organization of the content of the image.

Contour detection

Contour detection consists of locating the edges of the protruding elements of an artwork versus pixel contrast. Classic methods (filters high pass / sharpening kernels, Canny, etc.) do not allow you to have complete contours because they are dependent on the lighting and texture of the images.

The HED (Holistically Nested Edge Detection) approach is a neural network architecture of end-to-end convolutional neurons [2] inspired by networks of fully convolutional neurons. Based on human perception in the search for outlines of objects, it uses different levels of perception, structural information, context.

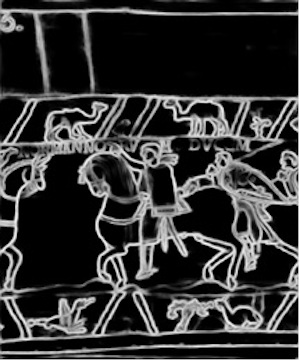

Scene 23, Bayeux Tapestry

Contours calculated with HED method on Scene 23, Bayeux Tapestry

Region Segmentation

Segmentation of an image consists of separating its elements into distinct objects. These methods include several approaches like (1) K-means, algorithm of grouping of regions (clustering). (2) Gaussian mixture model [3], learning method used to estimate the distribution of random variables by modeling them as a sum of several Gaussians, and is calculated iteratively via the Expectation-Maximization (EM) algorithm. (3) Deeplab V3 [4]: Network of deep convolutional neurons, used for semantic segmentation of images. It is characterized by an Encoding-Decoding architecture. (4) Slic Superpixels [5]: divides the image into groups of connected pixels with similar colors.

Our approach: A preprocessing is carried out to choose the object so semi-automatic: We apply the Mean-shift method to standardize the colors (less clusters), then the grabcut method to extract an object and reduce the error induced by spatial proximity.

On the extracted object, we apply GMM (because K-means induces a greater error) to obtain a segmentation into regions of our image.

Then, for a more intuitive tactile cutout with relevant details and relief, we apply HED (contours) to the GMM image (regions). We get contours simplified, smoother and easier to follow by touch.