Tactile representation of an artwork

Tactile representations are based on the contours (borders) of objects, because contours make it possible to locate objects and delimit them within a scene. By following the contours, a person can imagine the object (form a mental image of it) and recognize it. Figure 1 (right) shows the outlines of the scene "the raven and the fox", taken from the Bayeux Tapestry (left image). The tactile representations of the different elements of this scene are simplified: we tried to preserve the essential characteristics that allow them to be recognized (a tactile gist).

Figure 1. Scene "the raven and the fox": image of the Bayeux Tapestry and its tactile representation with the outlines.

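The Python sketch below is a minimal illustration of the contour principle. It extracts the outline of an object that is assumed to be already isolated as a binary mask (1 = object, 0 = background, stored as a list of lists). The function name `outline` is hypothetical and is not the authors' implementation. A pixel is kept when it belongs to the object and touches the background, or the image border, through one of its four neighbours.

```python
def outline(mask):
    """Return a 0/1 mask of contour pixels: object pixels that have at least
    one 4-connected neighbour in the background or outside the image."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue  # background pixels are never part of the outline
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                # outside the image, or a background neighbour: border pixel
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    out[y][x] = 1
                    break
    return out
```

For a filled 3 x 3 square, this keeps the ring of 8 border pixels and drops the centre pixel. Only the shape's silhouette remains, which is what the fingers follow.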

By recognizing the objects represented, their number and their relative locations, it is possible to understand a scene (that is, to create a mental representation of it).
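The information listed above (which objects, how many and where) can be sketched as a small scene description. In this Python sketch, each recognized object is assumed to be given as a hypothetical bounding box `(name, x, y, w, h)` in image coordinates, with x pointing right and y pointing down. The helper `describe_scene` is also hypothetical. It counts the objects per category and reports a coarse relative location for each pair, based on the dominant axis between the box centres.

```python
from collections import Counter

def describe_scene(objects):
    """objects: list of (name, x, y, w, h) boxes, x to the right, y downwards.
    Returns short sentences: object counts, then pairwise relative locations."""
    counts = Counter(name for name, *_ in objects)
    lines = [f"{n} x {name}" for name, n in sorted(counts.items())]
    centres = [(name, x + w / 2, y + h / 2) for name, x, y, w, h in objects]
    for i, (a, xa, ya) in enumerate(centres):
        for b, xb, yb in centres[i + 1:]:
            dx, dy = xa - xb, ya - yb
            # keep only the dominant direction between the two centres
            if abs(dx) >= abs(dy):
                rel = "left of" if dx < 0 else "right of"
            else:
                rel = "above" if dy < 0 else "below"
            lines.append(f"{a} is {rel} {b}")
    return lines
```

For example, with invented boxes for the fable scene, `describe_scene([("raven", 40, 0, 20, 20), ("fox", 35, 60, 30, 20)])` yields `["1 x fox", "1 x raven", "raven is above fox"]`.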

How to obtain a tactile gist?

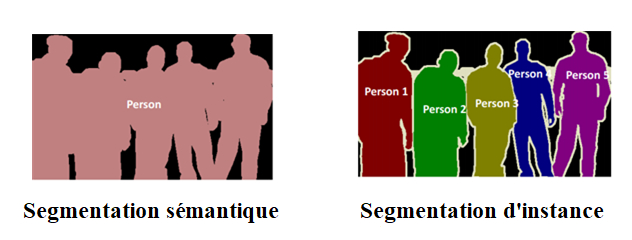

Our first approach to creating a tactile gist is based on semantic segmentation of a scene. This segmentation allows parts of the image to be grouped into categories of generic (simplified) objects. Figure 2 illustrates this concept.

Figure 2. Semantic segmentation and instance segmentation (source: https://ichi.pro/fr/segmentation-d-instance-en-une-seule-etape-un-examen-33996392156233).

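A short Python sketch can make the difference between the two kinds of segmentation in Figure 2 concrete. It assumes that a semantic label map is already available, with one class id per pixel and 0 for the background. The helper `instances_from_semantic` is hypothetical and treats every 4-connected region of a single class as a separate instance. This is only a rough approximation: real instance segmentation networks can separate touching objects of the same class, and connected components cannot.

```python
from collections import deque

def instances_from_semantic(sem, background=0):
    """Split a semantic label map into instances: each 4-connected region of
    pixels sharing the same non-background class gets its own instance id.
    Returns (instance_map, {instance_id: class_id})."""
    h, w = len(sem), len(sem[0])
    inst = [[0] * w for _ in range(h)]
    classes = {}
    next_id = 0
    for sy in range(h):
        for sx in range(w):
            c = sem[sy][sx]
            if c == background or inst[sy][sx]:
                continue  # background, or already part of an instance
            next_id += 1
            classes[next_id] = c
            inst[sy][sx] = next_id
            queue = deque([(sy, sx)])  # breadth-first flood fill
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and sem[ny][nx] == c and not inst[ny][nx]):
                        inst[ny][nx] = next_id
                        queue.append((ny, nx))
    return inst, classes
```

In semantic terms, two separate blobs of class 1 are "the same thing". After this step they become instances 1 and 2, each still tagged with class 1.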

Two approaches are most often considered to semantically segment an image:

- Segmentation into contours

- Segmentation into regions
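A classical gradient operator gives a simple baseline for segmentation into contours. The Python sketch below applies the Sobel operator to a grayscale image (a list of lists of intensities) and thresholds the gradient magnitude. The threshold is an assumption that has to be tuned for each image. This is not the HED network used for Figure 3; it only illustrates what an edge map is.

```python
import math

# 3 x 3 Sobel kernels for horizontal (x) and vertical (y) derivatives
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))

def sobel_edges(img, threshold):
    """Return a 0/1 edge map where the Sobel gradient magnitude of the
    grayscale image exceeds `threshold`. Border pixels are left at 0."""
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for j in range(3):
                for i in range(3):
                    v = img[y + j - 1][x + i - 1]
                    gx += SOBEL_X[j][i] * v
                    gy += SOBEL_Y[j][i] * v
            if math.hypot(gx, gy) > threshold:
                edges[y][x] = 1
    return edges
```

On an image with a sharp vertical step from 0 to 100, only the two columns on either side of the step are marked as edges.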

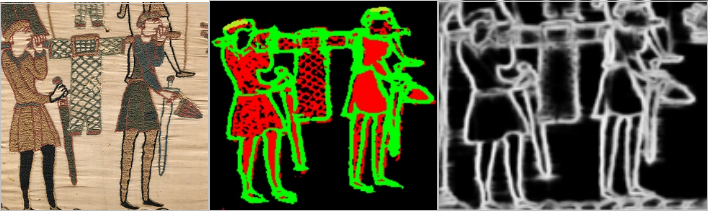

In Figure 3, the left image shows scene 37 of the Bayeux Tapestry. The center image shows its segmentation into regions (split-and-merge), and the right image shows its contour representation, obtained with HED (Holistically-Nested Edge Detection), a convolutional neural network.

Figure 3. Segmentation into regions (center image) and outlines (right image) of scene 37 of the Bayeux Tapestry.

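Split-and-merge, used for the center image of Figure 3, can be sketched in a few dozen lines of Python. The split step recursively divides a block into quadrants until it is homogeneous. The merge step then joins adjacent blocks with similar intensities. Both criteria are assumptions chosen for simplicity, since the text does not specify them:

- the homogeneity test: maximum minus minimum intensity within a block is at most `thr`;
- the merge test: the mean intensities of two adjacent blocks differ by at most `thr`.

```python
def split_and_merge(img, thr):
    """Minimal split-and-merge segmentation of a grayscale image (list of lists).
    Returns a label map with region labels 0..n-1 in scan order."""
    if thr < 0:
        raise ValueError("thr must be non-negative")
    h, w = len(img), len(img[0])
    leaves = []  # ((y0, x0, y1, x1), mean) with half-open block bounds

    def split(y0, x0, y1, x1):
        vals = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
        if max(vals) - min(vals) <= thr:  # homogeneous block: keep it
            leaves.append(((y0, x0, y1, x1), sum(vals) / len(vals)))
            return
        ys = [y0, (y0 + y1) // 2, y1] if y1 - y0 > 1 else [y0, y1]
        xs = [x0, (x0 + x1) // 2, x1] if x1 - x0 > 1 else [x0, x1]
        for ya, yb in zip(ys, ys[1:]):
            for xa, xb in zip(xs, xs[1:]):
                split(ya, xa, yb, xb)

    split(0, 0, h, w)

    owner = [[0] * w for _ in range(h)]  # pixel -> index of its leaf block
    for k, ((y0, x0, y1, x1), _) in enumerate(leaves):
        for y in range(y0, y1):
            for x in range(x0, x1):
                owner[y][x] = k

    parent = list(range(len(leaves)))  # union-find over leaf blocks

    def find(k):
        while parent[k] != k:
            parent[k] = parent[parent[k]]
            k = parent[k]
        return k

    # merge: join adjacent leaves whose mean intensities are close enough
    for y in range(h):
        for x in range(w):
            a = owner[y][x]
            for ny, nx in ((y + 1, x), (y, x + 1)):
                if ny < h and nx < w:
                    b = owner[ny][nx]
                    if a != b and abs(leaves[a][1] - leaves[b][1]) <= thr:
                        parent[find(a)] = find(b)

    relabel = {}
    return [[relabel.setdefault(find(owner[y][x]), len(relabel)) for x in range(w)]
            for y in range(h)]
```

Because the merge step compares neighbouring blocks pairwise, a slow intensity gradient can chain many blocks into one region. Production implementations often compare a block with the running statistics of the whole region instead.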

Creation of 3D and 2.5D models

3D and 2.5D (raised) models are another way to access the content of images of paintings. Our approach to building these models is based on additive manufacturing (3D printing).
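Slicing software for 3D printers commonly takes STL meshes as input. Assume a 2.5D model is stored as a height map, with one height per pixel. The hypothetical helper below turns such a map into a closed ASCII STL solid with three parts:

- a top surface that follows the relief;
- a flat base;
- four side walls.

The triangles are wound so that their normals point outwards. STL carries no units. Treating `pitch`, `base` and the heights as millimetres is a common convention, not something stated in the text.

```python
import math

def heightmap_to_stl(height, pitch=1.0, base=1.0, name="relief"):
    """Return an ASCII STL string for a closed solid: flat bottom at z = 0,
    top surface at z = base + height[y][x], one grid point per pixel,
    `pitch` units between neighbouring points."""
    rows, cols = len(height), len(height[0])
    if rows < 2 or cols < 2:
        raise ValueError("need at least a 2 x 2 height map")

    def top(x, y):
        return (x * pitch, y * pitch, base + height[y][x])

    def bottom(x, y):
        return (x * pitch, y * pitch, 0.0)

    facets = []

    def tri(a, b, c):
        # facet normal from the right-hand rule on the vertex order a, b, c
        u = [b[i] - a[i] for i in range(3)]
        v = [c[i] - a[i] for i in range(3)]
        n = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
        length = math.sqrt(sum(k * k for k in n)) or 1.0
        facets.append((tuple(k / length for k in n), (a, b, c)))

    for y in range(rows - 1):
        for x in range(cols - 1):
            # top surface: counter-clockwise seen from above (normal +z)
            tri(top(x, y), top(x + 1, y), top(x + 1, y + 1))
            tri(top(x, y), top(x + 1, y + 1), top(x, y + 1))
            # bottom: reversed winding (normal -z)
            tri(bottom(x, y), bottom(x + 1, y + 1), bottom(x + 1, y))
            tri(bottom(x, y), bottom(x, y + 1), bottom(x + 1, y + 1))

    # side walls: walk the border counter-clockwise seen from above
    loop = ([(x, 0) for x in range(cols - 1)]
            + [(cols - 1, y) for y in range(rows - 1)]
            + [(x, rows - 1) for x in range(cols - 1, 0, -1)]
            + [(0, y) for y in range(rows - 1, 0, -1)])
    for p, q in zip(loop, loop[1:] + loop[:1]):
        tri(bottom(*p), bottom(*q), top(*q))
        tri(bottom(*p), top(*q), top(*p))

    out = [f"solid {name}"]
    for n, verts in facets:
        out.append(f"  facet normal {n[0]:.6g} {n[1]:.6g} {n[2]:.6g}")
        out.append("    outer loop")
        for v in verts:
            out.append(f"      vertex {v[0]:.6g} {v[1]:.6g} {v[2]:.6g}")
        out.append("    endloop")
        out.append("  endfacet")
    out.append(f"endsolid {name}")
    return "\n".join(out) + "\n"
```

Every edge of the resulting mesh is shared by exactly two triangles, traversed in opposite directions. This is the watertightness that slicers expect. The result can be written to a file with `open("relief.stl", "w").write(heightmap_to_stl(h))`.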

The object model to be printed combines two sources. The first is a 3D reconstruction of the work from its 2D image, acquired with a conventional camera. The second is 3D information obtained by stereophotometry (photometric stereo), a technique extended here to images acquired through glass.
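Stereophotometry (photometric stereo) recovers surface orientation from several images taken from the same viewpoint under different light directions. Under the usual Lambertian assumption, a pixel lit from the unit direction l_k has intensity I_k = ρ (n · l_k), where ρ is the albedo and n the unit surface normal. With three or more non-coplanar lights, g = ρ n follows from a least-squares solve. The per-pixel Python sketch below implements only this textbook model, and its function name is hypothetical. It does not model shadows, specular reflections or the through-glass extension mentioned above.

```python
import math

def _det3(m):
    """Determinant of a 3 x 3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def photometric_stereo_pixel(lights, intensities):
    """Lambertian photometric stereo for one pixel.
    lights: K >= 3 unit light directions (lx, ly, lz); intensities: K values.
    Solves L g = I in the least-squares sense, with g = albedo * normal,
    and returns (albedo, (nx, ny, nz))."""
    if len(lights) < 3 or len(lights) != len(intensities):
        raise ValueError("need at least three lights and one intensity per light")
    # normal equations: (L^T L) g = L^T I
    A = [[sum(l[i] * l[j] for l in lights) for j in range(3)] for i in range(3)]
    b = [sum(l[i] * v for l, v in zip(lights, intensities)) for i in range(3)]
    d = _det3(A)
    if abs(d) < 1e-12:
        raise ValueError("light directions are (nearly) coplanar")
    g = []
    for c in range(3):  # Cramer's rule: replace column c of A by b
        m = [row[:] for row in A]
        for r in range(3):
            m[r][c] = b[r]
        g.append(_det3(m) / d)
    albedo = math.sqrt(sum(x * x for x in g))
    if albedo == 0:
        return 0.0, (0.0, 0.0, 1.0)  # black pixel: orientation undefined
    return albedo, tuple(x / albedo for x in g)
```

In practice, this solve is repeated for every pixel. Array libraries do it for all pixels at once, but the algebra is the same.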

Figure 4 (taken from scene 23 of the Bayeux Tapestry) shows Duke William of Normandy (left image), the visual/tactile gist of the figure of William (center image) and a relief (2.5D) representation of Duke William (right image).

Figure 4. Representations of Duke William the Conqueror (from left to right): image of the Tapestry, visual gist and relief image (2.5D).

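A 2.5D relief such as the one in Figure 4 can be stored as a height map, with one height value per pixel. The sketch below is a hypothetical illustration of going from a gist to such a map. It raises the outline pixels higher than the interior of the figure, so that the contours stand out under the fingers. The default heights (1.0 mm for lines, 0.4 mm for the interior) are arbitrary assumptions, not values from the text.

```python
def gist_to_relief(outline, region, line_mm=1.0, region_mm=0.4):
    """Build a 2.5D height map (one height per pixel) from a gist.
    outline: 0/1 mask of contour pixels; region: 0/1 mask of the figure.
    Outline pixels get line_mm, other figure pixels region_mm, background 0."""
    if len(outline) != len(region) or any(
            len(a) != len(b) for a, b in zip(outline, region)):
        raise ValueError("outline and region masks must have the same shape")
    return [[line_mm if o else (region_mm if r else 0.0)
             for o, r in zip(orow, rrow)]
            for orow, rrow in zip(outline, region)]
```

A height map of this kind is the usual input for producing a printable relief mesh.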

Figure 5 (taken from scene 1 of the Bayeux Tapestry) shows King Edward (left image), the visual and tactile gist of the king's figure and the relief map (right image) estimated by stereophotometry (15 million pixels each).

Figure 5. Different representations of King Edward (scene 1 of the Bayeux Tapestry).

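A relief map can be obtained from the normals estimated by stereophotometry by integrating the surface slopes. For a surface z(x, y), a normal (nx, ny, nz) gives dz/dx = -nx/nz and dz/dy = -ny/nz. The Python sketch below uses naive path integration: along the first row, then down each column, with the trapezoidal rule. The function name is hypothetical, and the normals are assumed to be expressed in a frame whose x axis follows the columns and whose y axis follows the rows.

This method is exact for noise-free planar data but accumulates errors along the integration path. Global least-squares methods such as Frankot-Chellappa integration are usually preferred on real data. The text does not say which method produced Figure 5, and pure Python would be far too slow for images of 15 million pixels.

```python
def integrate_normals(normals, pitch=1.0):
    """Naive path integration of a normal field into a height map.
    normals[y][x] = (nx, ny, nz) with nz > 0 (need not be unit length).
    Heights are relative to pixel (0, 0); `pitch` is the pixel spacing."""
    h, w = len(normals), len(normals[0])
    p = [[-n[0] / n[2] for n in row] for row in normals]  # slope dz/dx
    q = [[-n[1] / n[2] for n in row] for row in normals]  # slope dz/dy
    z = [[0.0] * w for _ in range(h)]
    for x in range(1, w):  # integrate along the first row
        z[0][x] = z[0][x - 1] + pitch * (p[0][x - 1] + p[0][x]) / 2
    for y in range(1, h):  # then down every column
        for x in range(w):
            z[y][x] = z[y - 1][x] + pitch * (q[y - 1][x] + q[y][x]) / 2
    return z
```

For a tilted plane whose normal is proportional to (-1, -0.5, 2), the result is z = 0.5 x + 0.25 y.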

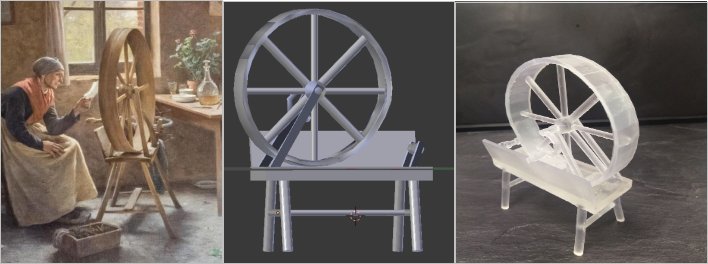

In Figure 6, the painting kept at the Martainville Museum (left image) was used to create a model of the spinning wheel in Blender (center image), which was then printed in 3D (right image).

Figure 6. Spinning wheel (Martainville museum) and its different representations.
